When using the start and stop functions (cudaProfilerStart() and cudaProfilerStop()), you also need to tell the profiling tool to disable profiling at the start of the application. For nvprof, use the `--profile-from-start off` flag. For the Visual Profiler, clear the Start execution with profiling enabled checkbox in the Settings View.

The Visual Profiler can collect a trace of the CUDA function calls made by your application. It shows these calls in the Timeline View, so you can see where each CPU thread in the application is invoking CUDA functions. To understand what the application's CPU threads are doing outside of CUDA function calls, you can use the NVIDIA Tools Extension API (NVTX).
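As an illustration of NVTX, the sketch below wraps two CPU-only phases and one GPU phase in named ranges with nvtxRangePushA() and nvtxRangePop(). A tool that records NVTX, such as the Visual Profiler, should then show these ranges on the timeline alongside the CUDA calls. It assumes the header-only NVTX v3 headers bundled with recent CUDA toolkits (older toolkits use `<nvToolsExt.h>` and link with `-lnvToolsExt`). The names loadInput, sumOnHost and incrementKernel are hypothetical placeholders.

```cuda
// Sketch: annotate CPU-side phases with NVTX ranges.
// Assumption: header-only NVTX v3 from a recent CUDA toolkit.
// Older toolkits: #include <nvToolsExt.h> and link with -lnvToolsExt.
// loadInput, sumOnHost and incrementKernel are hypothetical placeholders.
#include <nvtx3/nvToolsExt.h>
#include <cuda_runtime.h>
#include <cstdio>
#include <numeric>
#include <vector>

// GPU work: add 1 to every element.
__global__ void incrementKernel(int* data, int n) {
    int i = blockIdx.x * blockDim.x + threadIdx.x;
    if (i < n) data[i] += 1;
}

// CPU-only work that makes no CUDA calls; without NVTX it would not
// be visible on the timeline.
std::vector<int> loadInput(int n) {
    nvtxRangePushA("loadInput");
    std::vector<int> v(n);
    std::iota(v.begin(), v.end(), 0);  // 0, 1, ..., n-1
    nvtxRangePop();
    return v;
}

// More CPU-only work, marked as its own range.
long long sumOnHost(const std::vector<int>& v) {
    nvtxRangePushA("sumOnHost");
    long long s = std::accumulate(v.begin(), v.end(), 0LL);
    nvtxRangePop();
    return s;
}

int main() {
    const int n = 1 << 16;
    std::vector<int> h = loadInput(n);

    nvtxRangePushA("gpuPhase");  // groups the CUDA calls below under one range
    int* d = nullptr;
    cudaMalloc(&d, n * sizeof(int));
    cudaMemcpy(d, h.data(), n * sizeof(int), cudaMemcpyHostToDevice);
    incrementKernel<<<(n + 255) / 256, 256>>>(d, n);
    cudaMemcpy(h.data(), d, n * sizeof(int), cudaMemcpyDeviceToHost);
    cudaFree(d);
    nvtxRangePop();

    // Each element is now i + 1, so the sum is n * (n + 1) / 2.
    const long long expected = static_cast<long long>(n) * (n + 1) / 2;
    const long long got = sumOnHost(h);
    std::printf("%s\n", got == expected ? "PASSED" : "FAILED");
    return got == expected ? 0 : 1;
}
```

NVTX calls are designed to be cheap when no tool is attached, so ranges like these can usually stay in the code between profiling sessions.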


Profiling a region is also helpful when the application operates in phases, where a different set of algorithms is active in each phase. When the performance of each phase can be optimized independently of the others, you want to profile each phase separately to focus your optimization efforts.

Similarly, the application may contain algorithms that operate over a large number of iterations, where the performance of the algorithm does not vary significantly across those iterations. In this case you can collect profile data from a subset of the iterations.

To limit profiling to a region of your application, CUDA provides functions to start and stop profile data collection. cudaProfilerStart() starts profiling and cudaProfilerStop() stops it; with the CUDA driver API, cuProfilerStart() and cuProfilerStop() provide the same functionality. To use these functions you must include cuda_profiler_api.h (or cudaProfiler.h for the driver API).
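For the iterative case, a minimal sketch might bracket only a fixed window of iterations with cudaProfilerStart() and cudaProfilerStop(). The kernel relaxStep and the constants kIterations, kProfileFirst and kProfileLast are hypothetical. The cudaDeviceSynchronize() calls around the window are a conservative choice to keep kernels from neighbouring iterations out of the collected data, not a documented requirement.

```cuda
// Sketch: collect profile data for a window of iterations only.
// Hypothetical: relaxStep, kIterations, kProfileFirst, kProfileLast.
#include <cuda_runtime.h>
#include <cuda_profiler_api.h>
#include <cstdio>

constexpr int kIterations = 1000;   // total iterations
constexpr int kProfileFirst = 100;  // first profiled iteration
constexpr int kProfileLast = 109;   // last profiled iteration (inclusive)

// True when iteration `it` lies inside the profiled window.
bool inProfiledWindow(int it) {
    return it >= kProfileFirst && it <= kProfileLast;
}

// One step of a placeholder iterative algorithm: x = 0.5 * (x + 1).
__global__ void relaxStep(float* x, int n) {
    int i = blockIdx.x * blockDim.x + threadIdx.x;
    if (i < n) x[i] = 0.5f * (x[i] + 1.0f);
}

int main() {
    const int n = 1 << 20;
    float* d_x = nullptr;
    if (cudaMalloc(&d_x, n * sizeof(float)) != cudaSuccess) return 1;
    cudaMemset(d_x, 0, n * sizeof(float));

    bool profiling = false;
    for (int it = 0; it < kIterations; ++it) {
        const bool want = inProfiledWindow(it);
        if (want && !profiling) {
            cudaDeviceSynchronize();  // earlier iterations finish before the window
            cudaProfilerStart();
        } else if (!want && profiling) {
            cudaDeviceSynchronize();  // profiled iterations finish before stopping
            cudaProfilerStop();
        }
        profiling = want;
        relaxStep<<<(n + 255) / 256, 256>>>(d_x, n);
    }
    if (profiling) cudaProfilerStop();  // window ran to the last iteration

    const cudaError_t err = cudaDeviceSynchronize();
    cudaFree(d_x);
    if (err != cudaSuccess) {
        std::fprintf(stderr, "CUDA error: %s\n", cudaGetErrorString(err));
        return 1;
    }
    return 0;
}
```

To use it, build with nvcc (for example `nvcc iterations.cu -o iterations`, file name hypothetical) and run `nvprof --profile-from-start off ./iterations` so that collection begins only at cudaProfilerStart(). Keeping the window test in a small host function makes it easy to move the window or select a phase instead of an iteration range.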


A test harness initializes the data, invokes the CUDA functions that perform the algorithm, and then checks the results for correctness. Using a test harness is a common and productive way to quickly iterate on and test algorithm changes. When profiling, you want to collect profile data for the CUDA functions implementing the algorithm, but not for the harness code that initializes the data or checks the results.
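A sketch of such a harness, assuming a simple SAXPY as the "algorithm", is shown below: data setup and the correctness check sit outside the cudaProfilerStart()/cudaProfilerStop() pair, so only runAlgorithm() is profiled. The names saxpyKernel, runAlgorithm and maxAbsDiff are hypothetical. The cudaFree(0) call is a common idiom for creating the CUDA context before profiling starts, so that context setup is not attributed to the algorithm.

```cuda
// Sketch: a test harness that profiles only the algorithm, not the
// setup or the correctness check. saxpyKernel, runAlgorithm and
// maxAbsDiff are hypothetical names used for illustration.
#include <cuda_runtime.h>
#include <cuda_profiler_api.h>
#include <cmath>
#include <cstdio>
#include <vector>

__global__ void saxpyKernel(float a, const float* x, float* y, int n) {
    int i = blockIdx.x * blockDim.x + threadIdx.x;
    if (i < n) y[i] = a * x[i] + y[i];
}

// The algorithm under test: y = a * x + y, computed on the GPU.
cudaError_t runAlgorithm(float a, const std::vector<float>& x, std::vector<float>& y) {
    const int n = static_cast<int>(x.size());
    const size_t bytes = x.size() * sizeof(float);
    float* d_x = nullptr;
    float* d_y = nullptr;
    cudaMalloc(&d_x, bytes);
    cudaMalloc(&d_y, bytes);
    cudaMemcpy(d_x, x.data(), bytes, cudaMemcpyHostToDevice);
    cudaMemcpy(d_y, y.data(), bytes, cudaMemcpyHostToDevice);
    saxpyKernel<<<(n + 255) / 256, 256>>>(a, d_x, d_y, n);
    cudaMemcpy(y.data(), d_y, bytes, cudaMemcpyDeviceToHost);
    cudaFree(d_x);
    cudaFree(d_y);
    return cudaGetLastError();  // an error recorded by the calls above, if any
}

// Host-side check: largest |v[i] - expected|.
float maxAbsDiff(const std::vector<float>& v, float expected) {
    float m = 0.0f;
    for (float e : v) m = std::fmax(m, std::fabs(e - expected));
    return m;
}

int main() {
    const int n = 1 << 20;
    // Setup (not profiled): inputs and CUDA context creation.
    std::vector<float> x(n, 1.0f), y(n, 2.0f);
    cudaFree(0);

    cudaProfilerStart();  // profile only the algorithm
    const cudaError_t err = runAlgorithm(2.0f, x, y);
    cudaProfilerStop();

    // Check (not profiled): every y[i] should be 2 * 1 + 2 = 4.
    const bool ok = err == cudaSuccess && maxAbsDiff(y, 4.0f) == 0.0f;
    std::printf("%s\n", ok ? "PASSED" : "FAILED");
    return ok ? 0 : 1;
}
```

Run under nvprof with `--profile-from-start off`; the trace should then contain the copies and kernel from runAlgorithm() but none of the harness work.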

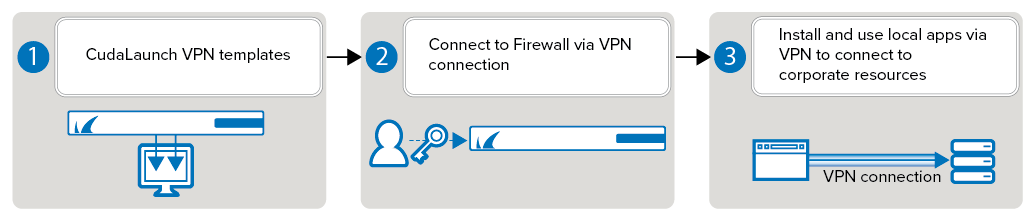

Note that Visual Profiler and nvprof will be deprecated in a future CUDA release. The NVIDIA Volta platform is the last architecture on which these tools are fully supported. NVIDIA recommends the next-generation tools: NVIDIA Nsight Systems for GPU and CPU sampling and tracing, and NVIDIA Nsight Compute for GPU kernel profiling. Refer to the Migrating to Nsight Tools from Visual Profiler and nvprof section for more details.

An event is a countable activity, action, or occurrence on a device. It corresponds to a single hardware counter value that is collected during kernel execution. To see a list of all available events on a particular NVIDIA GPU, type `nvprof --query-events`.

A metric is a characteristic of an application that is calculated from one or more event values. To see a list of all available metrics on a particular NVIDIA GPU, type `nvprof --query-metrics`. You can also refer to the metrics reference.

The CUDA profiling tools do not require any application changes to enable profiling; however, by making some simple modifications and additions, you can greatly increase the usability and effectiveness of profiling. This section describes these modifications and how they can improve your profiling results.

Focused Profiling

By default, the profiling tools collect profile data over the entire run of your application. But, as explained below, you typically only want to profile the region(s) of your application containing some or all of the performance-critical code. Limiting profiling to performance-critical regions reduces the amount of profile data that both you and the tools must process, and focuses attention on the code where optimization will result in the greatest performance gains.

There are several common situations where profiling a region of the application is helpful. For example, the application may be a test harness that contains a CUDA implementation of all or part of your algorithm.
This document describes NVIDIA profiling tools that enable you to understand and optimize the performance of your CUDA, OpenACC or OpenMP applications. The Visual Profiler is a graphical profiling tool that displays a timeline of your application's CPU and GPU activity and includes an automated analysis engine to identify optimization opportunities. The nvprof profiling tool enables you to collect and view profiling data from the command line.


The user manual for NVIDIA profiling tools for optimizing performance of CUDA applications.
